To determine whether a viewer liked a piece of media and would want to see it again, researchers have traditionally relied on self-report surveys administered to a panel of participants. In this research, conducted on data that has since been released as the Affectiva-MIT Facial Expression Dataset (AM-FED), the authors used automated methods to classify individual “liking” and “desire to view again”. Although the dataset is still modest in size, this work shows that data similar to that obtained through intrusive surveys can be collected simply by observing viewers' facial expressions.

Abstract

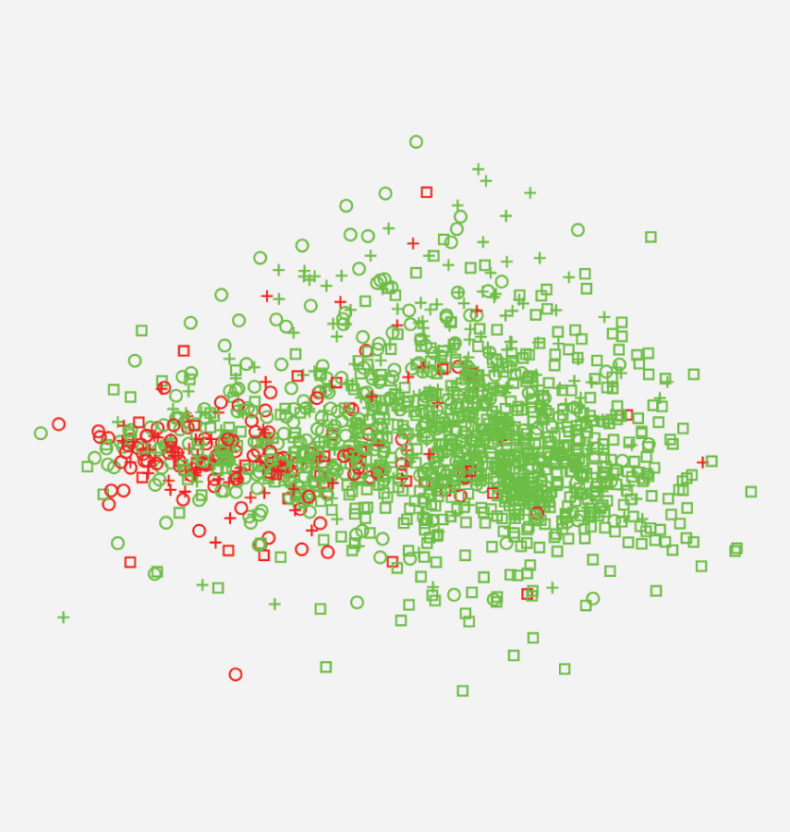

We present an automated method for classifying “liking” and “desire to view again” based on over 1,500 facial responses to media collected over the Internet. This is a very challenging pattern recognition problem that involves robust detection of smile intensities in uncontrolled settings and classification of naturalistic and spontaneous temporal data with large individual differences. We examine the manifold of responses and analyze the false positives and false negatives that result from classification. The results demonstrate the possibility for an ecologically valid, unobtrusive evaluation of commercial “liking” and “desire to view again”, strong predictors of marketing success, based only on facial responses. The area under the curve for the best “liking” and “desire to view again” classifiers was 0.8 and 0.78 respectively when using a challenging leave-one-commercial-out testing regime. The technique could be employed in personalizing video ads that are presented to people whilst they view programming over the Internet or in copy testing of ads to unobtrusively quantify effectiveness.
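
The leave-one-commercial-out regime mentioned in the abstract can be illustrated with a minimal Python sketch. It holds out every response to one commercial, fits on the remaining commercials, and computes the area under the ROC curve over the pooled held-out scores. Everything here is an assumption for illustration only: the toy rows, the single "mean smile intensity" feature, and the midpoint-of-class-means scorer are hypothetical stand-ins and not the classifiers or features used in the paper.

```python
def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def leave_one_group_out(groups):
    """Yield (held_out, train_idx, test_idx) once per distinct group."""
    for held_out in sorted(set(groups)):
        train = [i for i, g in enumerate(groups) if g != held_out]
        test = [i for i, g in enumerate(groups) if g == held_out]
        yield held_out, train, test


def fit_midpoint(x, y):
    """Toy 1-D model: midpoint between the two class means of the feature."""
    mean_pos = sum(v for v, c in zip(x, y) if c == 1) / y.count(1)
    mean_neg = sum(v for v, c in zip(x, y) if c == 0) / y.count(0)
    return (mean_pos + mean_neg) / 2.0


# Hypothetical toy data: (commercial id, mean smile intensity, liked?)
rows = [
    ("ad_a", 0.9, 1), ("ad_a", 0.2, 0), ("ad_a", 0.7, 1),
    ("ad_b", 0.8, 1), ("ad_b", 0.1, 0), ("ad_b", 0.4, 0),
    ("ad_c", 0.6, 1), ("ad_c", 0.3, 0), ("ad_c", 0.5, 1),
]
groups = [r[0] for r in rows]
x = [r[1] for r in rows]
y = [r[2] for r in rows]

pooled_labels, pooled_scores = [], []
for held_out, train, test in leave_one_group_out(groups):
    # Fit only on responses to the other commercials.
    midpoint = fit_midpoint([x[i] for i in train], [y[i] for i in train])
    for i in test:
        pooled_labels.append(y[i])
        pooled_scores.append(x[i] - midpoint)  # higher = more likely "liked"

pooled_auc = auc(pooled_labels, pooled_scores)
print(f"pooled leave-one-commercial-out AUC: {pooled_auc:.2f}")
```

Splitting by commercial rather than by individual response means the model is never evaluated on an ad it saw during fitting, which is why the abstract describes this regime as challenging.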